A practical guide to DORA metrics

Tempo Team

What DevOps team doesn’t want to release faster and recover quicker when things go wrong? DORA metrics break that goal into four categories: how often code goes out, how long changes take, how frequently failures happen, and, when they do, how quickly systems bounce back.

Before these metrics existed, teams argued over what “good” looked like. Now, they use hard data to separate opinion from reality and show the path to real improvement. And those stats don’t just sit in dashboards. They shape whether customers stay loyal and whether teams can keep up in a market where speed is survival.

In this guide, we’ll explain each DORA metric and how it contributes to a high-performing development team.

What are DORA metrics?

DORA metrics are a set of key performance indicators (KPIs) that track how quickly and reliably development teams deliver software. Developed by the DevOps Research and Assessment (DORA) program, now part of Google Cloud, they’ve become the gold standard in DevOps for measuring speed and quality. Often shown on a project dashboard, they give teams quick visibility into problem areas and help guide small adjustments in real time.

What are the 4 DORA metrics?

In DevOps, four key metrics define team performance, each focusing on a different part of the software delivery process: deployment frequency, lead time for changes, change failure rate, and mean time to recovery.

1. Deployment frequency (DF)

Deployment frequency refers to how often a team pushes code into production. It reflects process efficiency and encourages smaller, more frequent updates that keep the product competitive and customers engaged.

This metric also helps define a team’s ideal release rhythm. For instance, a team might settle on shipping code one to three times per week. By tracking deployment frequency, you can compare your actual average against your product goals, team capacity, and customer expectations to find the cadence that works best.

2. Lead time for changes (LT)

Lead time for changes, sometimes called mean lead time, is the time it takes for a committed change to reach production. It resembles flow time in SAFe reporting, which tracks the span from when work enters the pipeline to when it reaches users. Knowing how quickly your team turns changes around helps you keep a steady pace and deliver consistently.

For example, if lead time runs a week and customers complain about slow updates, you might respond by adjusting team capacity, adding automation, or tightening code review cycles.

3. Change failure rate (CFR)

Change failure rate is the percentage of deployments that lead to a failure in production, whether errors, downtime, or defects. It’s calculated by dividing the number of deployments that caused a failure by the total number of deployments, which shows whether quality is keeping up with speed.

CFR works as a counterbalance to velocity metrics like lead time and deployment frequency. A team may release quickly, but if half of those releases fail, they’ll lose time fixing bugs and frustrate customers in the process.

4. Mean time to recovery (MTTR)

Mean time to recovery, sometimes called time to restore service, captures how long it takes to resolve an incident once it’s identified. MTTR helps teams prepare for inevitable failures and recover with minimal disruption.

What’s “good” recovery time depends on context. A one-day turnaround might be acceptable for a minor bug, while a security issue should be resolved in under an hour.

What are the benefits of DORA metrics?

By boiling delivery performance down to four core KPIs, DORA metrics help teams build on what works and fix what doesn't. Here's a closer look at their primary benefits.

Provide objective performance measures

DORA metrics give an objective view of your process, output, and incident response, which you can compare with industry benchmarks to see how you stack up and set purposeful targets. They also give you a clear picture of stability and speed, as well as a solid baseline for measuring progress as your delivery scales.

Highlight process bottlenecks

Examining the quality and frequency of your entire development process lets you pinpoint specific bottlenecks and act on them. If customer reviews mention long downtimes during maintenance, DORA metrics may confirm a high MTTR, but they could also reveal a high CFR that’s driving repeated outages and interruptions. With this insight, you can address both issues proactively.

Improve speed and quality

DORA metrics balance two forces that often pull against each other: velocity and stability. Pushing for speed alone can hurt quality, while focusing only on quality slows delivery, which impacts profitability, market edge, and brand reputation. These KPIs give you an overview of every major performance angle so you can build a well-balanced strategy.

Align teams on common goals

These key metrics create a benchmark for all your teams, including operations, business, and development. By using DORA metrics, each group can see how their work fits into the larger process, which ultimately breaks down barriers and fosters collaboration.

For example, low deployment frequency might prompt development teams to ship smaller changes more often, while showing business teams that product roadmaps need to reflect the actual delivery pace.

How are DORA metrics calculated?

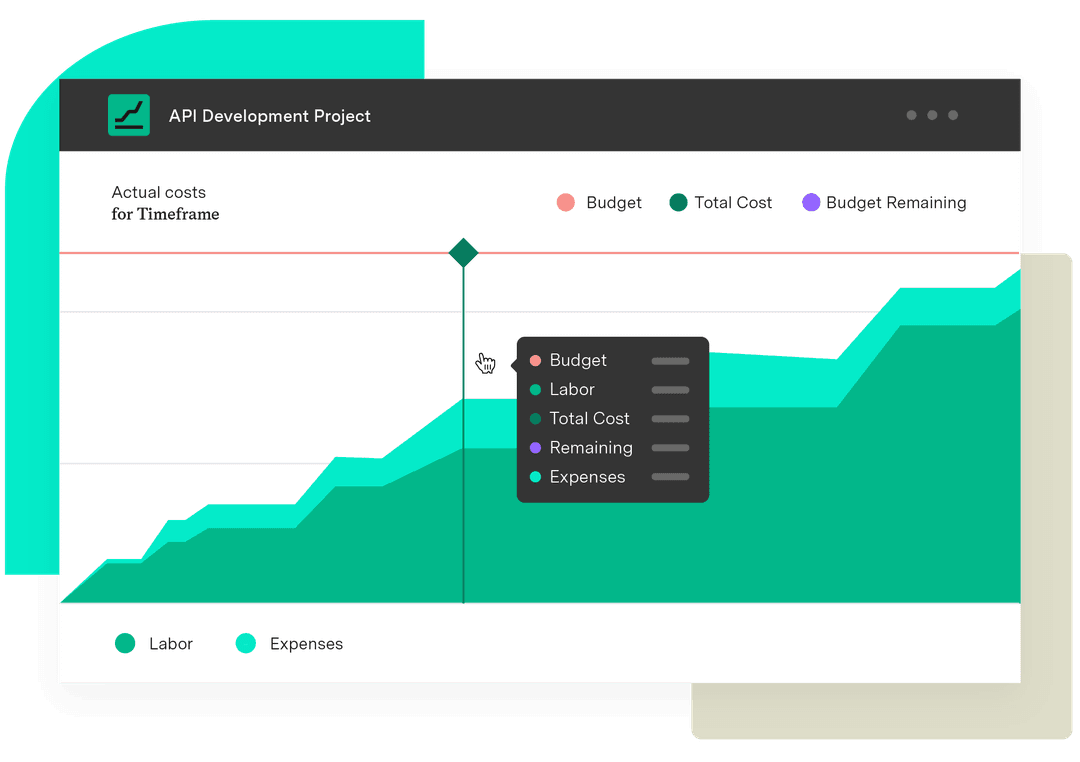

Here’s a quick overview of how to collect data for each DORA metric. To keep the process simple, it’s best to track results in KPI software like Tempo, which includes real-time insights from Jira.

Calculating deployment frequency

Determine your deployment frequency by dividing the number of production deployments in a set period by the number of days in that period. For example, if you release code 15 times in 30 days, the result is 0.5 deployments per day, or roughly one deployment every two days.
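The division above can be sketched in a few lines of Python. This is a minimal illustration, not Tempo's implementation: the function name `deployment_frequency` and the deployment dates are hypothetical, and it assumes you already have a list of production deployment dates exported from your CI/CD tool.

```python
from datetime import date

def deployment_frequency(deploy_dates: list[date], start: date, end: date) -> float:
    """Average production deployments per day in the inclusive window [start, end]."""
    if end < start:
        raise ValueError("end must not be before start")
    period_days = (end - start).days + 1  # count both boundary days
    in_window = [d for d in deploy_dates if start <= d <= end]
    return len(in_window) / period_days

# Hypothetical log: 15 deployments in June 2024, one every other day
deploys = [date(2024, 6, day) for day in range(1, 31, 2)]

per_day = deployment_frequency(deploys, date(2024, 6, 1), date(2024, 6, 30))
print(per_day)      # 0.5
print(per_day * 7)  # 3.5 (per week)
```

Multiplying the daily rate by 7 converts it to a weekly figure, which is often easier to compare against a target release cadence.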

Calculating lead time

Define the start and end points of your development pipeline, such as when a change is committed and when it reaches production. Measure the elapsed time for each change, repeat this for every change delivered in a given period, and average the results to find your lead time.
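Here is a minimal sketch of those steps. It assumes the start point is the commit time and the end point is the production deployment time; swap in whatever boundaries your team defines. The function name `mean_lead_time` and the timestamps are hypothetical.

```python
from datetime import datetime, timedelta

def mean_lead_time(changes: list[tuple[datetime, datetime]]) -> timedelta:
    """Average elapsed time across (start, end) pairs, e.g. (commit, deploy)."""
    if not changes:
        raise ValueError("no changes delivered in this period")
    durations = [end - start for start, end in changes]
    # sum() needs a timedelta start value because timedelta + int is not defined
    return sum(durations, timedelta()) / len(durations)

# Hypothetical changes delivered in one week: (committed_at, deployed_at)
changes = [
    (datetime(2024, 6, 3, 9, 0), datetime(2024, 6, 4, 9, 0)),    # 24 h
    (datetime(2024, 6, 5, 10, 0), datetime(2024, 6, 5, 22, 0)),  # 12 h
    (datetime(2024, 6, 6, 8, 0), datetime(2024, 6, 7, 20, 0)),   # 36 h
]
print(mean_lead_time(changes))  # 1 day, 0:00:00
```

Because the function keeps the individual durations, you could also report a median from the same list if a few unusually slow changes skew the average.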

Calculating change failure rate

CFR follows a similar approach to deployment frequency: divide the number of failed deployments by the total number of deployments in a period. For instance, if you deliver 12 deployments over four weeks and three of them fail, CFR is 3 ÷ 12 = 0.25, or 25%.

Link each incident to the deployment that caused it, then count the deployments with at least one failure rather than the raw number of incidents. This gives a broad view of development quality without letting one faulty deployment that triggers several incidents, which may be a rare one-off error, inflate the rate.
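A minimal sketch of this counting rule, assuming each incident record already carries the ID of the deployment that caused it. The function name `change_failure_rate`, the deployment IDs, and the incident IDs are all hypothetical.

```python
def change_failure_rate(deployment_ids: list[str], incidents: list[tuple[str, str]]) -> float:
    """Share of deployments linked to at least one incident.

    `incidents` holds (incident_id, deployment_id) pairs. Several incidents
    caused by the same deployment count as a single failed deployment.
    """
    if not deployment_ids:
        raise ValueError("no deployments in this period")
    deployed = set(deployment_ids)
    # A set collapses repeat incidents from one deployment into one failure
    failed = {dep for _, dep in incidents if dep in deployed}
    return len(failed) / len(deployed)

deployments = [f"deploy-{n}" for n in range(1, 13)]  # 12 deployments in four weeks
incidents = [
    ("INC-1", "deploy-2"),
    ("INC-2", "deploy-2"),  # second incident from the same deployment
    ("INC-3", "deploy-7"),
    ("INC-4", "deploy-11"),
]
print(change_failure_rate(deployments, incidents))  # 0.25
```

Counting raw incidents instead would give 4 ÷ 12 ≈ 0.33, overstating the rate because of the single deployment that caused two incidents.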

Calculating MTTR

Find your mean time to recovery by dividing the total time spent restoring service by the number of incidents. If your team handled four incidents in a month and spent 10 hours fixing them, MTTR would be 10 ÷ 4 = 2.5 hours.
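The same calculation can be sketched from incident timestamps. This assumes each incident records when it was identified and when service was restored; the function name `mean_time_to_recovery` and the incident data are hypothetical, chosen to reproduce the four-incident, 10-hour example.

```python
from datetime import datetime, timedelta

def mean_time_to_recovery(incidents: list[tuple[datetime, datetime]]) -> timedelta:
    """Average time from identification to restoration across incidents."""
    if not incidents:
        raise ValueError("no incidents in this period")
    total = sum((restored - identified for identified, restored in incidents), timedelta())
    return total / len(incidents)

# Hypothetical month of incidents: (identified_at, restored_at)
incidents = [
    (datetime(2024, 6, 2, 14, 0), datetime(2024, 6, 2, 15, 0)),    # 1 h
    (datetime(2024, 6, 9, 9, 30), datetime(2024, 6, 9, 11, 30)),   # 2 h
    (datetime(2024, 6, 15, 20, 0), datetime(2024, 6, 15, 23, 0)),  # 3 h
    (datetime(2024, 6, 27, 7, 0), datetime(2024, 6, 27, 11, 0)),   # 4 h
]
mttr = mean_time_to_recovery(incidents)
print(mttr.total_seconds() / 3600)  # 2.5
```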

Connecting DevOps performance to business value with Tempo

DORA metrics reveal how your team performs, and Tempo shows what that performance means in business terms that matter. Financial Manager analyzes key budget metrics, helping you visualize the true cost of downtime and adjust budgets with confidence. Timesheets tracks lead time, time spent, and MTTR, making it easy to log and report data in a few clicks. It also separates CapEx from OpEx, so you can plan smarter and maximize deductions.

Take the numbers out of dashboards and turn them into results – with Tempo.